introduction

CFEngine and Puppet are configuration management tools that can help you automate IT infrastructure. Practical examples include adding local users, installing Apache, and making sure password-based authentication in sshd is turned off. The more servers and the more complexity you have, the more valuable such a tool becomes.

In this test, we set out to explore how the performance of the tools changes as the environment scales, both in terms of node count and policy/manifest size. Amazon EC2 was used to make it easy to grow the environment. This test is primarily a response to the comments on this older test.

I want to start with a few disclaimers:

- I am affiliated with CFEngine (the company), and so it is extremely important for me to provide all the details so the test procedure and results can be scrutinized and reproduced. I would love for some of you to create independent and alternative tests.

- The exact numbers in this test probably do not map directly to your environment, as everybody’s environment is a little different (especially in hardware, node count and policy/manifest). The goal is therefore to identify trends and the degree of difference, not exact numbers.

test setup

Instance types:

- t1.micro: up to 2 ECUs (in short bursts), 1 core, 613 MB memory; used for the CFEngine clients / Puppet nodes

- m1.xlarge: 8 ECUs, 4 cores, 15 GB memory; used for the CFEngine policy server / Puppet master

Operating system:

- Ubuntu 12.04 64-bit (all hosts).

Software versions:

- CFEngine 3.3.5 (package from the CFEngine web site)

- Puppet 2.7.19 (installed with apt-get from http://apt.puppetlabs.com)

For simplicity, all ports were left open during the tests (an EC2 security group named “Everything” was used).

Both tools were set to run every 5 minutes.

test procedure

The CFEngine policy server and the Puppet master were set up manually first, as described in detail below. To keep the test efficient, both were configured to accept new clients automatically (trusting all keys in CFEngine, autosigning in Puppet).

Every 15 minutes, either 50 more clients were added (up to a total of 300; there wasn’t enough time to go higher) or the policy/manifest was changed. The policy/manifest was changed twice during the test, to see what impact this had. These were the exact steps taken:

| TIME (H:MM) | CLIENT COUNT | POLICY/MANIFEST |
|---|---|---|
| 0:00 | 50 | Apache |
| 0:15 | 100 | Apache |
| 0:30 | 150 | Apache |
| 0:45 | 200 | Apache |
| 1:00 | 200 | Apache, 100 echo commands |
| 1:15 | 250 | Apache, 100 echo commands |
| 1:30 | 250 | Apache, 200 echo commands |
| 1:45 | 300 | Apache, 200 echo commands |
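The schedule can also be restated as data to see what each 15-minute step required: launching another batch of instances, switching the policy/manifest, or neither. This is a minimal sketch; the Step type and the plan_steps helper are hypothetical names, and the values are copied from the table.

```python
from typing import List, NamedTuple, Tuple


class Step(NamedTuple):
    time: str           # elapsed time as h:mm, copied from the table
    clients: int        # total client count at this step
    echo_commands: int  # echo commands in the policy/manifest (0 = Apache only)


# Values copied from the schedule table.
SCHEDULE: List[Step] = [
    Step("0:00", 50, 0),
    Step("0:15", 100, 0),
    Step("0:30", 150, 0),
    Step("0:45", 200, 0),
    Step("1:00", 200, 100),
    Step("1:15", 250, 100),
    Step("1:30", 250, 200),
    Step("1:45", 300, 200),
]


def plan_steps(schedule: List[Step]) -> List[Tuple[str, int, bool]]:
    """Return (time, instances to launch, policy changed?) for each step."""
    plan = []
    prev_clients, prev_echo = 0, 0
    for step in schedule:
        launch = step.clients - prev_clients
        policy_changed = step.echo_commands != prev_echo
        plan.append((step.time, launch, policy_changed))
        prev_clients, prev_echo = step.clients, step.echo_commands
    return plan


if __name__ == "__main__":
    for when, launch, changed in plan_steps(SCHEDULE):
        actions = []
        if launch:
            actions.append(f"launch {launch} instances")
        if changed:
            actions.append("switch policy/manifest")
        print(when, ", ".join(actions) or "no change")
```

Running it shows that every step changes exactly one variable, either the client count or the policy/manifest, which is what makes the effect of each change visible in the graphs.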

CPU usage on the policy server/master was measured with Amazon CloudWatch. Client run time was measured by picking a random client and running each tool manually under the time utility. Each measurement was repeated three times and averaged.
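A rough Python equivalent of the client-side measurement is sketched below: run a command three times, record the wall-clock time of each run, and average. It assumes the reported figures are wall-clock ("real") time, which the post does not state, and average_runtime is a hypothetical helper name.

```python
import statistics
import subprocess
import sys
import time
from typing import Sequence


def average_runtime(cmd: Sequence[str], runs: int = 3) -> float:
    """Run cmd `runs` times and return the mean wall-clock time in seconds.

    Only wall-clock ("real") time is measured; the user and sys CPU times
    that the time utility also reports are not captured here.
    """
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises CalledProcessError if the command fails,
        # so a failed run is never silently averaged in.
        subprocess.run(cmd, check=True,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        samples.append(time.perf_counter() - start)
    return statistics.mean(samples)


if __name__ == "__main__":
    # On a CFEngine client (needs root and an installed agent) this would be:
    # average_runtime(["/var/cfengine/bin/cf-agent", "-K"])
    print(f"{average_runtime([sys.executable, '-c', 'pass']):.3f} s")
```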

The policy/manifest was changed twice by adding 100 echo commands each time (running /bin/echo 1, /bin/echo 2, … /bin/echo 100), to see how the tools handled a simple increase in workload.
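The echo workload is easier to generate than to type. The sketch below emits it both as Puppet exec resources and as a CFEngine commands promise. The post does not show how its site.pp and promises.cf files expressed these commands, so the resource titles (echo_1, echo_2, …), the bundle name echo_commands and the function names are hypothetical; the CFEngine bundle would also have to be listed in the bundlesequence.

```python
def puppet_echo_execs(count: int) -> str:
    """Return `count` Puppet exec resources running /bin/echo 1..count.

    An exec without creates/onlyif/unless/refreshonly runs on every agent
    run, so each resource adds a fixed amount of work to every run.
    """
    blocks = []
    for i in range(1, count + 1):
        blocks.append(
            f'exec {{ "echo_{i}":\n'
            f'  command => "/bin/echo {i}",\n'
            f'}}\n'
        )
    return "\n".join(blocks)


def cfengine_echo_bundle(count: int, name: str = "echo_commands") -> str:
    """Return a CFEngine 3 agent bundle with one commands promise per echo."""
    lines = [f"bundle agent {name}", "{", "commands:"]
    lines += [f'  "/bin/echo {i}";' for i in range(1, count + 1)]
    lines.append("}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(puppet_echo_execs(2))
    print(cfengine_echo_bundle(2))
```

Calling these with 100 and 200 gives the two larger policy/manifest variants on top of the Apache configuration.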

setting up the cfengine policy server

The Ubuntu 12.04 package was downloaded from the CFEngine web site. To save time and money, this package was uploaded to Amazon S3 for the clients to download (data transfer between S3 and EC2 in the same region is free). Note that I could have used the CFEngine apt repository, but since CFEngine is just one package I chose to install it directly with dpkg. The following steps were carried out:

- wget https://s3.amazonaws.com/my-amazon-s3-bucket/cfengine-community_3.3.5_amd64.deb

- dpkg -i cfengine-community_3.3.5_amd64.deb

- Install the policy to /var/cfengine/masterfiles

- /var/cfengine/bin/cf-agent --bootstrap --policy-server 10.4.67.126

Three versions of promises.cf were prepared, as described in the test procedure above: one for Apache only, one for Apache and 100 echo commands, and one for Apache and 200 echo commands.

The installation size was 7.8 MB (1 package).

adding cfengine nodes

The following steps were carried out to bootstrap a CFEngine node to the policy server.

- wget https://s3.amazonaws.com/my-amazon-s3-bucket/cfengine-community_3.3.5_amd64.deb

- dpkg -i cfengine-community_3.3.5_amd64.deb

- /var/cfengine/bin/cf-agent --bootstrap --policy-server 10.4.67.126

An Amazon EC2 user-data script containing these steps was created and used to bootstrap new nodes. The installation size was 7.8 MB (1 package). The exact command used to create new instances was the following:

# ec2-run-instances ami-137bcf7a --key amazon-key --user-data-file ./user-data-cfengine-ubuntu124 --instance-type t1.micro --instance-count 50 --group sg-52f5103a --availability-zone us-east-1d
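Because the same launch command was repeated for every batch of 50 clients, and only the user-data file differs between the CFEngine and Puppet runs, it can be wrapped in a small helper. The sketch below only builds and prints the command line using the long options of the classic EC2 API tools; the function name is hypothetical, the defaults are copied from the command above, and actually launching instances requires the EC2 API tools and credentials to be set up.

```python
import shlex
from typing import List


def ec2_run_instances_cmd(user_data_file: str, count: int = 50,
                          ami: str = "ami-137bcf7a",
                          key: str = "amazon-key",
                          instance_type: str = "t1.micro",
                          group: str = "sg-52f5103a",
                          zone: str = "us-east-1d") -> List[str]:
    """Build the ec2-run-instances argument list for one batch of clients."""
    return [
        "ec2-run-instances", ami,
        "--key", key,
        "--user-data-file", user_data_file,
        "--instance-type", instance_type,
        "--instance-count", str(count),
        "--group", group,
        "--availability-zone", zone,
    ]


if __name__ == "__main__":
    # CFEngine batch; pass ./user-data-puppet-ubuntu124 for the Puppet run.
    cmd = ec2_run_instances_cmd("./user-data-cfengine-ubuntu124")
    print(shlex.join(cmd))
    # To launch for real: subprocess.run(cmd, check=True)
```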

setting up the puppet master

According to the Puppet documentation, the default Puppet master configuration is not production-ready: “The default web server is simpler to configure and better for testing, but cannot support real-life workloads”.

The main reason for this is that the standard Ruby interpreter cannot run threads in parallel, so puppetmasterd effectively handles only one connection at a time. This would limit the scale to just tens of clients, which is too low for our purposes.
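The limit follows from simple arithmetic: if the master serves one request at a time and each agent run needs S seconds of master time, then during one run interval T it can serve at most T/S agents. The per-run service times below are illustrative assumptions, not measurements from this test; the 300-second interval matches the 5-minute run interval used here, and the workers parameter models several master processes running in parallel, as in the Passenger setup described next.

```python
def max_agents(run_interval_s: float, master_seconds_per_run: float,
               workers: int = 1) -> int:
    """Upper bound on agents a master can serve within one run interval.

    Assumes requests are spread evenly and each worker handles one request
    at a time; queueing effects push the practical limit lower.
    """
    if master_seconds_per_run <= 0 or workers < 1:
        raise ValueError("need a positive service time and at least one worker")
    return int(workers * run_interval_s // master_seconds_per_run)


if __name__ == "__main__":
    # Hypothetical service times with a 5-minute (300 s) run interval.
    for s in (2.0, 5.0, 10.0):
        print(f"{s:>4} s per run: {max_agents(300, s)} agents with one worker, "
              f"{max_agents(300, s, workers=4)} with four")
```

With a few seconds of master time per agent run, a single worker tops out at tens of agents, which is the order of magnitude the documentation warns about.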

The recommended way around this is to put Apache with the Passenger extension in front of Puppet; Apache receives all connections and hands them over to Puppet. There are some documents that describe this for Red Hat and Debian. The configuration is quite complex, so I used Ubuntu 12.04, which provides a package (puppetmaster-passenger) that contains the necessary configuration.

These steps were taken to install and set up the Puppet master with Passenger:

- # wget http://apt.puppetlabs.com/puppetlabs-release-precise.deb

- # dpkg -i puppetlabs-release-precise.deb

- # apt-get update

- # echo "10.4.217.221 puppet" >> /etc/hosts

- # apt-get install puppetmaster-passenger

- Install the manifest to /etc/puppet.

Three versions of site.pp were prepared, as described in the test procedure above: one for Apache only, one for Apache and 100 echo commands, and one for Apache and 200 echo commands.

The installation size was 55 packages, 135 MB.

adding puppet nodes

The following steps were carried out to add a Puppet node to the master.

- wget http://apt.puppetlabs.com/puppetlabs-release-precise.deb

- dpkg -i puppetlabs-release-precise.deb

- apt-get update

- apt-get --assume-yes install puppet

- echo "10.4.217.221 puppet" >> /etc/hosts

- puppet agent --verbose --onetime --no-daemonize --waitforcert 120

- echo 'START=yes
DAEMON_OPTS=""' > /etc/default/puppet

- echo '[main]
logdir=/var/log/puppet
vardir=/var/lib/puppet
ssldir=/var/lib/puppet/ssl
rundir=/var/run/puppet
factpath=$vardir/lib/facter
templatedir=$confdir/templates
runinterval=300
splay=true
[master]
# These are needed when the puppetmaster is run by passenger
# and can safely be removed if webrick is used.
ssl_client_header = SSL_CLIENT_S_DN
ssl_client_verify_header = SSL_CLIENT_VERIFY
' > /etc/puppet/puppet.conf

- /etc/init.d/puppet start

All of this was incorporated into a script, which was passed as Amazon EC2 user-data and run at boot to quickly deploy new clients. The installation size was 11.9 MB (14 packages).

The exact command line used was this:

# ec2-run-instances ami-137bcf7a --key amazon-key --user-data-file ./user-data-puppet-ubuntu124 --instance-type t1.micro --instance-count 50 --group sg-52f5103a --availability-zone us-east-1d

results

server-side cpu usage

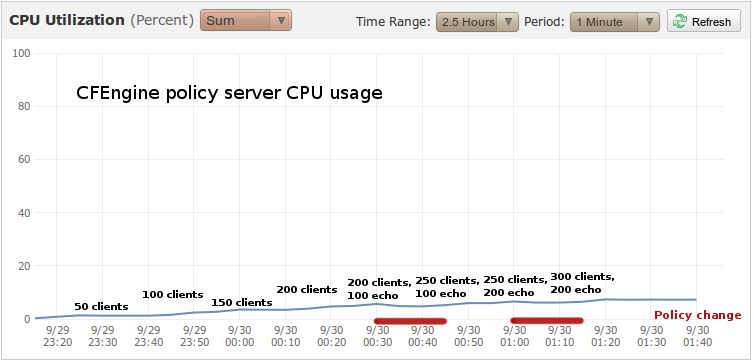

These graphs were taken directly from Amazon CloudWatch for the master/server instances.

From the graphs, we can see that the Puppet master instance uses about 10 times as much CPU as the CFEngine policy server at 50 clients. At 300 clients with 200 echo commands, the Puppet master uses about 18 times as much CPU.

The most interesting periods, though, are not those where we increase the client count, but those where we increase the client workload in the policy/manifest.

This happens when we add 100 extra echo commands, and during the following 15 minutes while they are run. These periods are indicated by red lines in both graphs:

- 200 clients, 100 echo commands (until we go to 250 clients)

- 250 clients, 200 echo commands (until we go to 300 clients)

The reason this is more interesting is that users will probably extend the policy/manifest more frequently than they add nodes. How does changing the policy/manifest impact the server?

Looking at the load around the red lines, we can clearly see that the Puppet master is heavily impacted by changing the manifest, while the CFEngine policy server seems unaffected by the changes.

client-side execution time

The client execution time data was captured with three runs each of time /var/cfengine/bin/cf-agent -K and time puppet apply --onetime --no-daemonize. The resulting data files for CFEngine and Puppet are also provided. Averaging the runs gives the following comparison graph (the ods file is available here).

At 50 hosts with just the Apache configuration, CFEngine agents run 20 times faster than Puppet agents. At 300 hosts, with 200 echo commands, CFEngine agents run 166 times faster than Puppet agents.

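Each environment label gives the client count (c) followed by either a (Apache only) or the number of echo commands (e); for example, 200c,100e means 200 clients running Apache and 100 echo commands. Note that some spikes at 200c,100e and 250c,200e are to be expected, since we added 100 more echo commands to the policy/manifest at these points. At 100c,a the Puppet agent had one very long run (as shown in the data files), which caused a spike there for Puppet.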

The individual charts make it easier to compare each tool with itself: does the client execution time increase much when only the number of nodes increases?

For completeness, the client execution results are provided in tabular form below.

| ENVIRONMENT | CFENGINE TIME (SECONDS) | PUPPET TIME (SECONDS) |
|---|---|---|
| 50c,a | 0.172 | 3.427 |
| 100c,a | 0.173 | 19.24 |
| 150c,a | 0.172 | 3.63 |
| 200c,a | 0.178 | 3.63 |
| 200c,100e | 0.481 | 22.408 |
| 250c,100e | 0.459 | 32.56 |
| 250c,200e | 0.742 | 106.4 |
| 300c,200e | 0.732 | 121.86 |
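The speed-up factors quoted earlier (about 20 times at 50c,a and about 166 times at 300c,200e) can be recomputed from this table. A minimal sketch; the dictionary layout and the speedup helper are just for illustration, and the values are copied from the table.

```python
# (CFEngine seconds, Puppet seconds) per environment, copied from the table.
RESULTS = {
    "50c,a": (0.172, 3.427),
    "100c,a": (0.173, 19.24),
    "150c,a": (0.172, 3.63),
    "200c,a": (0.178, 3.63),
    "200c,100e": (0.481, 22.408),
    "250c,100e": (0.459, 32.56),
    "250c,200e": (0.742, 106.4),
    "300c,200e": (0.732, 121.86),
}


def speedup(env: str) -> float:
    """How many times faster the CFEngine agent ran than the Puppet agent."""
    cfengine_s, puppet_s = RESULTS[env]
    return puppet_s / cfengine_s


if __name__ == "__main__":
    for env in RESULTS:
        print(f"{env:>10}: {speedup(env):6.1f}x")
```

The 100c,a ratio is inflated by the single long Puppet run mentioned above, so it should not be read as a trend.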

final remarks

It is clear that CFEngine is much more efficient and vertically scalable than Puppet. This is probably due to two factors:

- Puppet’s architecture is heavily centralised: the Puppet master does a lot of work for every client, especially catalog compilation. In contrast, CFEngine agents interpret the policy in a distributed fashion, and the CFEngine policy server is just a file server.

- Puppet runs under the Ruby interpreter, which is far less efficient than CFEngine’s natively compiled binaries.

The most interesting observation, I think, is that the Puppet master and agent performance were heavily influenced by the manifest complexity. When the manifest was small, increasing the agent count did not have much impact on agent performance. However, as the manifest grew, the performance of all the agents (and the master) degraded significantly. It is also evident that as the master gets more loaded, all the Puppet agents run slower. This can probably also be attributed to the heavily centralised architecture of Puppet.
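The client-side table is consistent with this observation. Holding the client count fixed and dividing the change in run time by the 100 added echo commands gives an approximate cost per echo command; the sketch below does that arithmetic with values copied from the table (the seconds_per_echo helper is a hypothetical name).

```python
def seconds_per_echo(before_s: float, after_s: float, added: int = 100) -> float:
    """Approximate extra agent run time per added echo command."""
    return (after_s - before_s) / added


# Client count is held constant within each pair (values from the table).
PAIRS = {
    "200 clients, 0 -> 100 echo": {"CFEngine": (0.178, 0.481),
                                   "Puppet": (3.63, 22.408)},
    "250 clients, 100 -> 200 echo": {"CFEngine": (0.459, 0.742),
                                     "Puppet": (32.56, 106.4)},
}

if __name__ == "__main__":
    for label, tools in PAIRS.items():
        for tool, (before, after) in tools.items():
            print(f"{label}, {tool}: {seconds_per_echo(before, after):.4f} s")
```

Per echo command, the Puppet agent's cost roughly quadruples between the two steps (about 0.19 s to about 0.74 s), while CFEngine's stays near 0.003 s. That is consistent with, though not proof of, a more loaded master slowing every Puppet agent.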

It would be interesting to create a more realistic policy/manifest to explore this further. The manifest in this test did not have many dependencies, so the Puppet resource dependency graph was quite simple. If the dependency graph were more realistic, would that have had an impact on the test results?

This post was written primarily in response to feedback on an older post on the same subject. Please don’t hesitate to add your comments below!